Like most people in the field of small molecule machine learning, we do our best to stay up to date with the latest research literature. Each month we find papers that tout sophisticated new architectures with claimed state-of-the-art performance. But when we actually test the new approaches on data from real drug campaigns, we usually come away disappointed with the results, and we’ve heard similar stories from other practitioners.

So we were excited to see Pat Walters’ recent blog post on the need for better benchmarks in ML for drug discovery. Walters lays out why existing ML benchmarks, such as those from MoleculeNet and Therapeutic Data Commons (TDC), don’t distinguish algorithms that are truly useful for drug discovery from those that aren’t. He advocates for the creation of large, uniform datasets of key endpoints, including ADME (absorption, distribution, metabolism, and excretion) properties like membrane permeability, microsomal stability, and aqueous solubility. We agree that these types of datasets will be a great asset to the research community.

However, we also believe that we don’t need to wait for the creation of these datasets to address several of the issues Walters highlights. We’ve found that, as messy as existing public data is, it’s possible to rigorously evaluate ML models by carefully selecting datasets, setting constraints on allowable training data, and using appropriate evaluation metrics.

In this post, we:

- Describe our approach for constructing benchmarks from public data in a manner that aligns with the needs of decision making in hit-to-lead and lead optimization.

- Walk through a case study showing that models that score highly on commonly-used ML benchmarks can have almost zero predictive ability within the data of historical drug discovery programs.

We believe that these results go a long way toward explaining the large gap between how well ML models appear to work in the literature and how well they’re seen to work by practicing medicinal and computational chemists. We hope they help push the field towards more stringent and realistic (but achievable) benchmarking approaches.

Part 1: Our approach to benchmarking

First decide what real-world problem the benchmark is approximating

Before we even begin thinking about constructing a benchmark, it’s important to answer the question: what real-world problem is this approximating? In this post, we’ll focus on creating ADME models for hit-to-lead and lead optimization, where chemists are trying to fine-tune a chemotype or make scaffold hops to improve a compound’s properties. This calls for a different approach to evaluation than, say, if we were focused on hit finding, where we might be looking for models that can find a “needle in a haystack” among diverse compounds. The most useful ADME model is a model that (1) can work off-the-shelf to help guide compound optimization and idea prioritization from “day 1” of a project, and (2) can be fine-tuned to become even more effective as the project progresses and ADME measurements are collected.

The iterative nature of fine-tuning to a project makes it a particularly hard problem for public data benchmarking (though see efforts like FS-Mol), so we’ve focused on assessing “day 1” utility. Our goal is to evaluate whether ML models are likely to helpfully guide decisions in a drug discovery campaign without additional retraining, even if the compounds in the program don’t closely resemble the model’s training data.

With this in mind, we propose the following guidelines for building benchmark datasets and evaluating algorithms on them.

Step 1: Assemble benchmark data from high-quality assays that represent real drug programs.

- Identify high-quality public datasets for the property of interest. These individual assays (following the terminology of ChEMBL) can be relatively small, e.g. 30 or so compounds each.

- Ensure all assay protocols are appropriate and collect assay metadata to enable inter-conversion of assay results where possible.

Step 2: Train models with data that excludes compounds similar to the benchmark data.

Step 3: Evaluate models on the benchmark using assay-stratified correlation metrics.

- Generate predictions for the benchmark data and then evaluate each model by calculating performance metrics on each individual assay and averaging the results.

- As a top-line performance metric, use one that captures the model’s ability to help guide choices among alternatives, such as Spearman rank correlation.

We expand on each of these steps in more detail below, including the motivation for each of them.

Step 1: Assemble benchmark data from high-quality assays that represent real drug programs

Databases like ChEMBL and PubChem contain a large amount of ADME data, often deposited in conjunction with a journal publication and representing a subset of data collected from a drug discovery program. While this data has limitations, which we discuss later on, it is nonetheless a rich resource for understanding the ADME landscape of historical programs and estimating how ML models would perform in these programs. To gain value from this data, careful curation to deal with the inconsistent experimental protocols across assays is key.

The most critical curation step is to ensure that all included assays are measuring the same underlying biological and chemical property. Collaboration with chemists and DMPK scientists is required to get this right. Some differences across protocols may be relatively immaterial, allowing data from different protocols to be used in the same benchmark. But other differences are critical and require data exclusion. For example, the ChEMBL-deposited HLM data from this paper was measured in the absence of the critical cofactor NADPH, and the ChEMBL-deposited MDCK-MDR1 permeability data from this paper was run in the presence of a P-gp inhibitor. Both would need to be excluded from benchmarking datasets for standard models of their respective properties.

After ensuring that all identified assays are measuring the same underlying property, collecting additional metadata can be useful to enable harmonization of assay results. For example, metadata like initial compound concentrations or incubation times might be needed to interconvert results. While completely harmonized data across assays in the benchmark isn’t strictly necessary for the evaluation approach we describe, it can be very helpful for deeper analyses.

Finally, as laid out clearly in Walters’ blog post, it’s important to ensure that structures in the curated assays are accurate and unambiguous. Incorrect structures should be removed, and stereochemistry should be handled with care.

Step 2: Train models with data that excludes compounds similar to the benchmark data

Our benchmark development approach is different from MoleculeNet and TDC in that it specifies test data, but not training data. This represents a data-centric approach in which the development of a training set is a focus of research and experimentation along with the choice of learning algorithm. We’ve seen in our own work that changes to how training data is curated can have a large impact on performance.

With the flexibility to select training data, it’s important to ensure that the trained models are generalizing outside of their training data and can perform well across many programs, not just those that happen to have similar chemical matter to training compounds. A common methodology for ensuring generalization in existing benchmarks is scaffold splitting, which allows two molecules to be placed on opposite sides of the train/test split only if their scaffolds (the rings and linkers of each molecule) differ by at least one atom. However, as we’ll see in the case study below, scaffold splitting can still allow highly similar molecules in the train and test sets.

Rather than scaffold splitting, we recommend using molecular fingerprints and a similarity measure, such as Tanimoto similarity of ECFP4 fingerprints, to exclude all training compounds that have high similarity (e.g. ≥ 0.5) to a test compound. While no approach to evaluating similarity is perfect, we’ve found this approach to provide a more rigorous guarantee that models are truly generalizing to the test set.
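As a rough illustration of this filtering step, here is a minimal sketch in plain Python. It assumes each fingerprint has already been computed elsewhere (for example with a cheminformatics toolkit) and is represented as a set of "on" bit indices; that representation, the function names `tanimoto` and `filter_training_set`, and the toy compounds are all our own illustrative choices, not the code used for this post.

```python
from typing import Dict, FrozenSet, List

# Assumed representation: a fingerprint is the set of its "on" bit indices.
Fingerprint = FrozenSet[int]


def tanimoto(a: Fingerprint, b: Fingerprint) -> float:
    """Tanimoto similarity: shared on-bits divided by total distinct on-bits."""
    if not a and not b:
        return 0.0  # convention chosen here for two empty fingerprints
    shared = len(a & b)
    return shared / (len(a) + len(b) - shared)


def filter_training_set(
    train: Dict[str, Fingerprint],
    test: Dict[str, Fingerprint],
    threshold: float = 0.5,
) -> List[str]:
    """Keep training IDs whose similarity to every test compound is below threshold."""
    test_fps = list(test.values())
    kept = []
    for compound_id, fp in train.items():
        max_sim = max((tanimoto(fp, t) for t in test_fps), default=0.0)
        if max_sim < threshold:
            kept.append(compound_id)
    return kept


# Toy fingerprints (hypothetical bit indices, not real ECFP4 output).
train = {
    "train_a": frozenset({1, 2, 3, 4}),              # similarity 0.6 to test_x
    "train_b": frozenset({10, 11, 12}),              # similarity 0.0 to test_x
    "train_c": frozenset({1, 2, 20, 21, 22, 23}),    # similarity 0.25 to test_x
}
test = {"test_x": frozenset({1, 2, 3, 5})}

kept = filter_training_set(train, test)
print(kept)  # ['train_b', 'train_c']
```

The comparison here is all-against-all, which is fine for benchmark-sized test sets; for very large training sets a bulk similarity routine from a cheminformatics library would likely be a better fit.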

Step 3: Evaluate models on the benchmark using assay-stratified correlation metrics

Once models have been trained and predictions have been produced for the benchmark compounds, it’s valuable to have a shared “top line” evaluation approach, though supplemental analyses and metrics are often useful.

Our proposed approach has two components:

- Calculate metrics stratified by assay, and then average the results, rather than calculating metrics using a single combined dataset.

- Focus on metrics that capture the model’s ability to prioritize among alternatives, such as Spearman or Pearson correlation.

Calculate assay-stratified metrics to avoid Simpson’s Paradox

The first component is motivated by the use case of the hit-to-lead and lead optimization phases of drug discovery, where ADME properties become a key focus. In this setting, drug hunters generally are trying to modify hits and leads to identify similar molecules with improved ADME properties. This means that a useful model needs to be able to distinguish molecules with better and worse properties within a local chemical space, rather than across a more global chemical space. By evaluating metrics within individual assays, which are often series of similar compounds from published drug campaigns (as we’ll see confirmed below), we better evaluate how a model does within local chemical spaces.

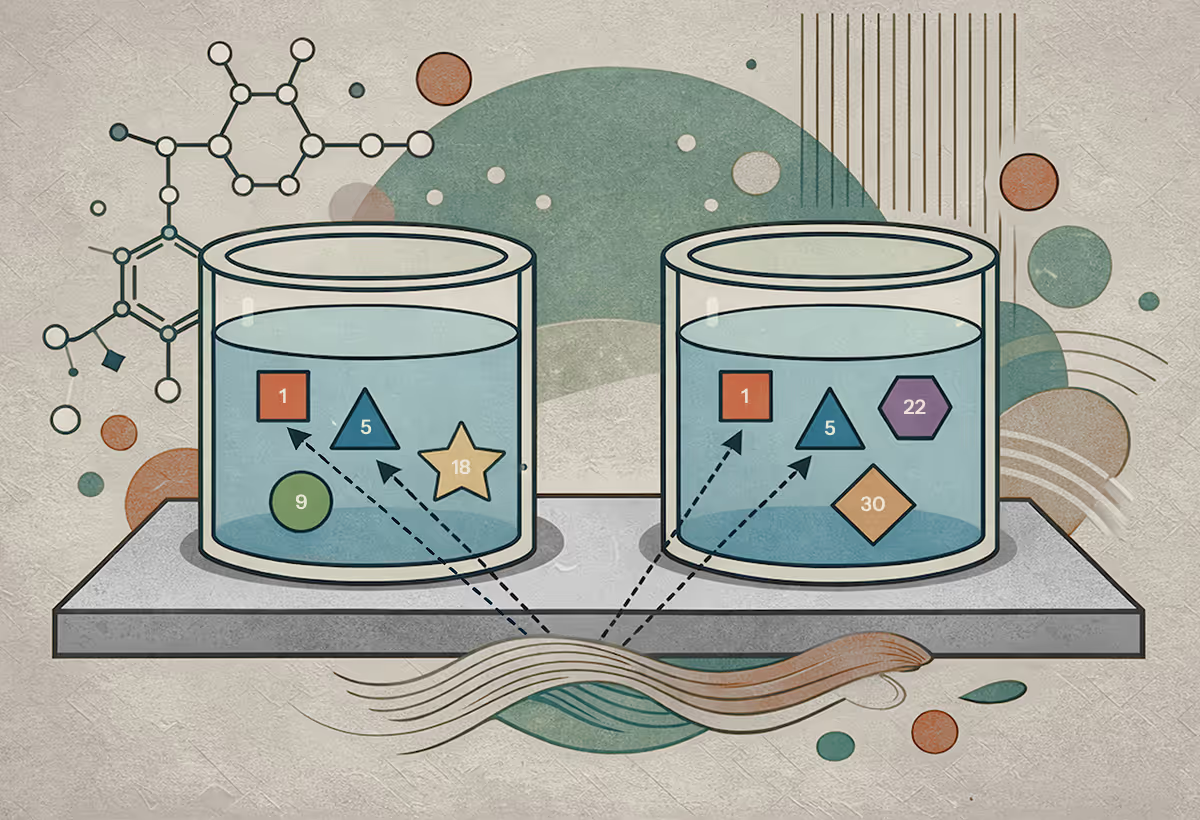

Evaluating within rather than across assays is particularly important because statistics measured in a pooled group versus in subgroups can diverge dramatically, a phenomenon known as Simpson’s Paradox. We can see a stylized example of how this can play out in drug discovery in the figures below. On the left, the relation between ML-predicted clearance and measured clearance in this hypothetical dataset appears strong; the Spearman R is ~0.72. But suppose the data is composed of three programs, shown on the right. The correlations within the three programs are all near zero, meaning this model would be nearly useless to help a chemist optimize clearance within each program. As we’ll see in the case study, this situation isn’t just theoretical; it occurs in practice as well.
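The difference between pooled and assay-stratified evaluation can be sketched in a few lines of plain Python. The toy numbers below are constructed by us to make the effect obvious (they are not the data behind the figure), and the helper names and the `(assay_id, predicted, measured)` record layout are illustrative assumptions.

```python
from collections import defaultdict
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; returns NaN if either input is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman correlation as the Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def assay_stratified_spearman(records):
    """Compute Spearman within each assay, then average across assays."""
    by_assay = defaultdict(list)
    for assay_id, predicted, measured in records:
        by_assay[assay_id].append((predicted, measured))
    per_assay = {}
    for assay_id, pairs in by_assay.items():
        preds, meas = zip(*pairs)
        per_assay[assay_id] = spearman(preds, meas)
    return mean(list(per_assay.values())), per_assay


# Three toy programs: the model separates programs but not compounds within one.
records = []
for offset, assay_id in [(0, "program_1"), (10, "program_2"), (20, "program_3")]:
    predicted = [offset + v for v in (1, 2, 3, 4)]
    measured = [offset + v for v in (2, 4, 1, 3)]
    records += [(assay_id, p, m) for p, m in zip(predicted, measured)]

pooled = spearman([r[1] for r in records], [r[2] for r in records])
stratified, per_assay = assay_stratified_spearman(records)
print(f"pooled Spearman: {pooled:.2f}")             # 0.90
print(f"assay-stratified mean: {stratified:.2f}")   # 0.00
```

In this constructed example the pooled correlation comes entirely from the model telling the programs apart, which is exactly the signal a chemist working inside one program cannot use.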

Focus on correlation metrics that measure a model’s ability to point chemists in the right direction

Our second component to evaluation is again motivated by the needs of hit-to-lead and lead optimization. While chemists ultimately want ADME properties to fall within specific absolute ranges, our experience is that day-to-day, chemists need ADME models to help make prioritization decisions; in other words, to take 20 ideas of possible compounds to synthesize and say “these three look most promising.” Correlation metrics are well designed to capture this notion.

Below, we can see a stylized example of two models making predictions for the same set of measured values in a program. On the left, the model has good correlation but is “miscalibrated,” with consistent over-estimation; there is a strong relationship between predicted and actual values (Spearman R ~0.8), but a large mean absolute error (~5.0) and very poor R² (~-5.9). On the right, the model is uninformative; the Spearman R is near zero but there is lower mean absolute error (~2.4) and a better, though still poor, R² (~-0.5). The model on the left can still be used to effectively prioritize compounds, despite its flaws, while the one on the right cannot. In this case, correlation metrics point us towards the more useful model, while MAE and R² do not.
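To see why a strongly correlated model can still have a negative R², consider an idealized case that is our own simplifying assumption rather than the data behind the figure: predictions that equal the measured values plus a constant offset b. The symbols below (measured values y_i, predictions ŷ_i, mean measured value ȳ, and n compounds) are introduced here only for illustration.

```latex
\begin{aligned}
\mathrm{MAE} &= \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert,
\qquad
R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2} \\[4pt]
\hat{y}_i = y_i + b \;&\Longrightarrow\;
\mathrm{MAE} = \lvert b \rvert,
\qquad
R^2 = 1 - \frac{n\,b^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}
\end{aligned}
```

Under this assumption, R² turns negative as soon as b² exceeds the (population) variance of the measured values, yet the predictions are ordered exactly like the measurements, so the Spearman correlation is 1. Error-based metrics penalize the offset, while rank-based metrics ignore it.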

In an ideal world, a model has both strong correlation and low error, and there are definitely issues caused by a miscalibrated model. But a miscalibrated model can still be highly useful and is often fixable via a linear re-calibration after a few ADME data points in a series are collected. An uninformative model, in contrast, even if it has lower error, is useless and cannot be easily corrected.
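A linear re-calibration of this kind might look like the sketch below: fit a slope and intercept on the first few measured compounds in a series, then apply them to later predictions. The function names and all numbers are hypothetical, chosen so the arithmetic is easy to follow.

```python
from statistics import mean


def fit_linear(predicted, measured):
    """Ordinary least squares fit of: measured ~ slope * predicted + intercept."""
    mp, mm = mean(predicted), mean(measured)
    sxx = sum((p - mp) ** 2 for p in predicted)
    if sxx == 0:
        raise ValueError("predictions must not all be identical")
    slope = sum((p - mp) * (m - mm) for p, m in zip(predicted, measured)) / sxx
    intercept = mm - slope * mp
    return slope, intercept


def mae(predicted, measured):
    """Mean absolute error between predictions and measurements."""
    return mean(abs(p - m) for p, m in zip(predicted, measured))


# First few compounds measured in a series (hypothetical values):
# the model consistently over-estimates by about a factor of two.
early_pred = [12.0, 16.0, 20.0]
early_meas = [6.0, 8.0, 10.0]
slope, intercept = fit_linear(early_pred, early_meas)

# Later compounds from the same series.
later_pred = [14.0, 18.0, 24.0]
later_meas = [7.5, 8.5, 12.5]
recalibrated = [slope * p + intercept for p in later_pred]

mae_before = mae(later_pred, later_meas)
mae_after = mae(recalibrated, later_meas)
print(f"MAE before: {mae_before:.2f}, after: {mae_after:.2f}")
```

Because the fitted slope is positive, the re-calibrated predictions keep the same rank order as the raw ones; the correction changes the error but not the model's ability to prioritize. The same fix applied to an uninformative model would simply shrink its predictions toward the series mean.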

Part 2: A case study with HLM stability

So how different is the picture of ML model performance created through this alternative benchmarking approach versus existing benchmarks? To find out, we’ll compare two different benchmark datasets for human liver microsomal stability (HLM), a key property in the hit-to-lead and lead optimization phases of drug discovery.

- The HLM dataset from the TDC ADME group, originally released by AstraZeneca and divided by TDC into a train set of 881 compounds and a test set of 221 compounds using scaffold splitting.

- The ChEMBL assays curated and harmonized by Aliagas et al (2015), containing 972 compounds collected from 27 distinct assays. This is an excellent example of the type of curation effort we propose for benchmark development.

We’ll take a look at the important ways the compounds in these benchmarks differ, then train models on the TDC training set and evaluate them against (1) the TDC test set and (2) the Aliagas dataset using our assay-level evaluation approach.

Data exploration: The compound distribution of standard benchmarks can cause misleading results

In the figure below (repeated from the top of this post), we’ve plotted a t-SNE embedding of the compounds in the two benchmark test sets, each superimposed on the TDC train set. The TDC test set shows scattered points without much clustering, and the points are “on top of” the training set, while the Aliagas test set has clear clusters, many far from the TDC training set.

Deeper dive on the TDC test set

As we can see in the t-SNE embedding, the TDC test set represents diverse chemotypes. We find that each compound in the test set has an average of just 5 similar compounds (ECFP4 Tanimoto similarity ≥ 0.5) from among the 221 total compounds. The high diversity of the set means it may be suitable as a measure of performance for virtual screening, but not for the hit-to-lead and lead optimization phase of projects where the diversity of compounds is likely to be far narrower.

As we can also see in the embedding, the compounds in the TDC test set are quite similar to the TDC train set. More than half of test compounds have at least one similar compound in the training set. Often, the degree of similarity is striking. For example, the most similar train-test pair differs by a single carbon atom added to a piperazine ring. Single-atom changes can and do sometimes cause large shifts in activity, but the high level of similarity still calls into question the use of scaffold splitting for ensuring generalization to novel chemical space.

Deeper dive on the Aliagas test set

We can see in the t-SNE embedding for the Aliagas dataset that the compounds are clustered based on the 27 assays from which they are sourced. There are almost no similar pairs of compounds across assays, but each compound has an average of 25 similar compounds within its own assay. This means the Aliagas dataset provides a challenge of distinguishing the stability of compounds within 27 different local regions of chemical space, approximating the kind of challenge frequently encountered in drug discovery. The Aliagas compounds also tend to be dissimilar from the TDC train set, and we can make sure that this is the case by removing any similar TDC compounds prior to training.

TDC test set evaluation: ML model performance appears high on a standard benchmark

We’ll evaluate three representative models on the TDC test set:

- Support Vector Regression (SVR) using ECFP4 fingerprints as features, trained on the TDC train set.

- ChemProp, a message passing neural network architecture, trained on the TDC train set.

- Crippen cLogP, a simple and commonly-used predictor of lipophilicity often used by chemists as a predictor of higher metabolic clearance.

Spearman R over the full test set is shown for the three models along with bootstrapped 95% confidence intervals. The SVR and ChemProp results are roughly in line with the top results in the current leaderboard. The cLogP model has almost no predictive ability.

Based on this benchmark, it looks like both ML models are effective predictors of HLM for a diverse range of chemical space. But we’ll see how this story changes when we evaluate using our benchmarking methodology on the Aliagas dataset.

Aliagas dataset evaluation: A use-case-specific benchmarking approach shows very different results

To evaluate the models on the Aliagas dataset, we removed 70 compounds in the TDC training set that were similar to at least one Aliagas compound and retrained the SVR and ChemProp models. In addition to SVR, ChemProp, and cLogP, we can also evaluate the SVR model reported in the Aliagas paper that was trained on about 20,000 internal Genentech data points. We’ve labeled this model GeneSVR. For this model the paper reports that 92% of the test compounds had Tanimoto similarity to the training dataset lower than 0.5.

We’ll first show results on a more traditional pooled evaluation approach, calculating Spearman R over all the data with bootstrapped 95% confidence intervals, before showing our alternative assay-stratified approach. In the pooled results, the performance of SVR and ChemProp drops by about 0.2 Spearman R. This suggests that the models’ apparent performance on the TDC test set is inflated by the high similarity between training and test compounds. The Genentech model, trained on the largest dataset (but with some potential for train/test chemotype overlap), performs the best at about 0.48 Spearman R, and the cLogP model performs a bit better than before, at 0.15 Spearman R.

Finally, when we switch our analysis to an assay-stratified level, the story of model performance shifts once again. The results below show Spearman R calculated within each of the 27 assays and then averaged, with a 95% confidence interval and with individual assay-level results shown as smaller blue points. Calculated this way, the performance of all of the ML models drops, with SVR dropping most precipitously. Interestingly, the performance of cLogP actually increases, from 0.15 Spearman R to 0.22 Spearman R. The GeneSVR model performs best, but its predictive ability is still lower than in the pooled evaluation, at 0.32 Spearman R. All four tested models have assay-level performance that is above 0 at the 0.05 significance level (by one-sample t-test), but no model performs significantly better or worse than the cLogP model at the 0.05 significance level (by paired t-test).
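For readers less familiar with these tests, the sketch below shows how the two t statistics relate: a paired t-test across assays is just a one-sample t-test on the per-assay differences between two models. The assay-level Spearman values here are made up for illustration, and the sketch stops at the t statistic and degrees of freedom; turning those into a p-value requires the t distribution, for which a statistics library would normally be used.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values, mu0=0.0):
    """Return (t statistic, degrees of freedom) for H0: mean(values) == mu0."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values")
    # Note: raises ZeroDivisionError if all values are identical.
    standard_error = stdev(values) / sqrt(n)
    return (mean(values) - mu0) / standard_error, n - 1


def paired_t(values_a, values_b):
    """Paired t-test computed as a one-sample test on per-assay differences."""
    if len(values_a) != len(values_b):
        raise ValueError("both models need one value per assay")
    differences = [a - b for a, b in zip(values_a, values_b)]
    return one_sample_t(differences)


# Hypothetical assay-level Spearman R for two models on the same four assays.
model_a = [0.1, 0.3, 0.2, 0.4]
model_b = [0.2, 0.2, 0.3, 0.3]

t_vs_zero, dof = one_sample_t(model_a)      # is model_a better than no skill?
t_a_vs_b, _ = paired_t(model_a, model_b)    # does model_a differ from model_b?
print(f"model_a vs 0: t = {t_vs_zero:.2f} with {dof} degrees of freedom")
print(f"model_a vs model_b: t = {t_a_vs_b:.2f}")
```

In this toy case model_a is clearly above zero on average, while the paired comparison is essentially zero because the two models trade wins assay by assay. Pairing matters because assays differ a lot in difficulty, and comparing models on the same assays removes that shared variation.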

The biggest takeaway from this analysis is the stark difference in apparent model performance in the assay-stratified Aliagas benchmark evaluation versus the TDC test set evaluation. This makes clear how big the gap is between how well models seem to perform on existing benchmarks and how useful they are likely to be in practice in a specific drug discovery program. In the most extreme case, SVR, which appeared highly predictive across a diverse chemical space in the TDC benchmark, was revealed to have close to zero predictive ability when applied to datasets from particular past drug campaigns. When embarking on a campaign in a new region of chemical space, chemists would on average do just as well, and perhaps better, using cLogP as a guide to microsomal clearance than either of the TDC-trained models.

Conclusions and moving forward

In this post we’ve walked through a method of benchmarking ML models’ ability to predict ADME properties within diverse chemical series, argued that this method better reflects real-world use cases, and shown how the performance of models trained and evaluated on a standard benchmark looked remarkably different when re-evaluated with this alternative approach.

The benchmarking methodology described here does have some limitations. Most notably, it assumes that assays curated from public databases are accurate proxies for the sets of compounds measured in drug discovery campaigns. But it’s well known that the measurements made public with a publication generally represent only a fraction of the compounds truly tested, and this fraction may not be representative. Furthermore, some ADME assays in public databases may not represent part of a drug development campaign at all. This is another area where future public data releases could be helpful. If data from past campaigns can be released in a manner that preserves the groupings of compounds into programs (and better yet, the programs’ time courses), this will make these datasets particularly useful for evaluating the usefulness of ADME models.

And finally, while benchmarks are essential for the development of the field, the ultimate test of an ML model is how well it can predict measurements and guide decisions in the setting of an actual in-progress drug discovery campaign. We will focus on evaluating models in this setting in a follow-up post.

If ML is to truly accelerate drug discovery, it’s important to see more datasets curated with an approach that approximates real-world use of ML models and to see models evaluated in ways that measure how well they generalize to new regions of chemical space. Without a clear connection between benchmarks and use cases, benchmarks risk becoming toy datasets that drive ML methods development to move orthogonally to practical impact. We’re excited to see how new approaches to benchmarking will push the field to innovate in algorithm development and in the curation and combination of training data. Ultimately, we believe that ML models can be a powerful tool for both ADME optimization and many other parts of the drug discovery cycle and that better benchmarking and evaluation methodology will be a key to their success.

Code availability

The code used for this post is available here.

Acknowledgements

We thank Pat Walters, Katrina Lexa, Melissa Landon, Josh Haimson, and Patrick Maher for helpful feedback on drafts of this post.